Posted in Computer Hardware on 04 Jun 2024 at 19:14 UTC

Introduction

Earlier this year, I built a server using a Supermicro X9DRi-LN4F+ motherboard in a custom case (build thread). It is loud. It is very loud.

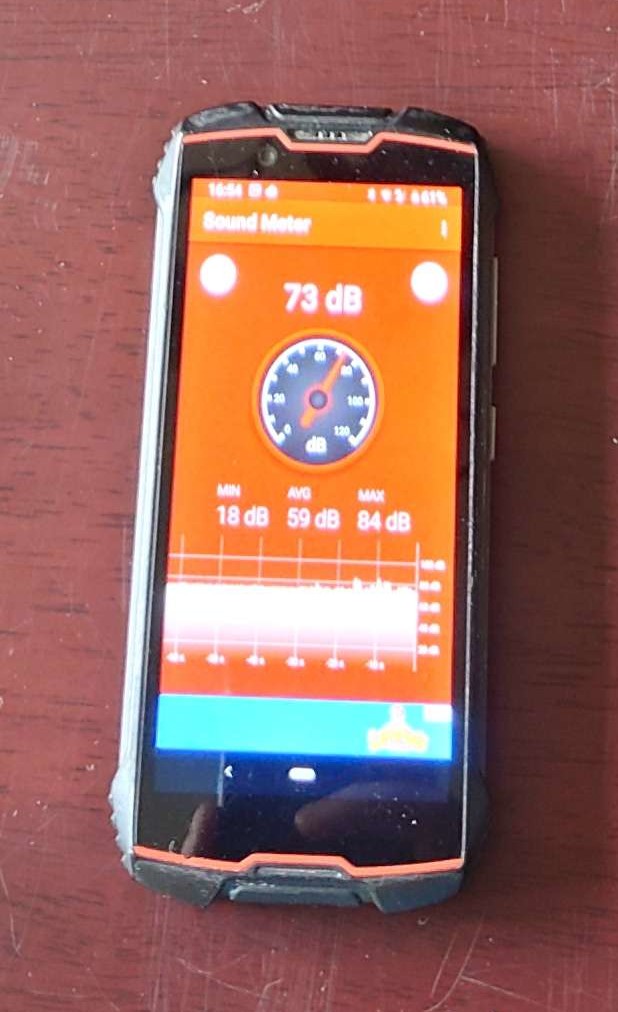

My phone running some random sound meter app is scientific, right?

Before starting this project, I took some baseline measurements. At idle, I recorded a noise level of 30 decibels in front of the server, and both CPUs were hovering around 40°C. After 10 minutes of running stress-ng --cpu 32, the recorded noise level was 73 decibels, the front CPU was hovering around 55°C, and the rear CPU, ingesting the pre-heated air from the front CPU cooler, was sitting around 75°C.

Not ideal.
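Reading temperatures off watch sensors by eye works, but timestamped samples make runs like the 10-minute stress-ng test easier to compare. Here is a minimal Python sketch. It assumes lm-sensors is installed and that the CPUs are reported by Intel's coretemp driver as "Package id 0" and "Package id 1". Labels vary by driver, so check the real output first. parse_package_temps and sample are hypothetical helper names.

```python
import re
import subprocess
from datetime import datetime, timezone

# Assumed coretemp line format, e.g.
#   "Package id 0:  +40.0°C  (high = +80.0°C, crit = +90.0°C)"
# Other drivers use different labels, so this pattern is an assumption.
PACKAGE_RE = re.compile(r"^(Package id \d+):\s+([+-]?\d+(?:\.\d+)?)\s*°?C", re.MULTILINE)


def parse_package_temps(sensors_output: str) -> dict[str, float]:
    """Return {label: temperature in °C} for each CPU package in `sensors` text output."""
    return {label: float(value) for label, value in PACKAGE_RE.findall(sensors_output)}


def sample() -> tuple[datetime, dict[str, float]]:
    """Run `sensors` once and pair the parsed package temperatures with a UTC timestamp."""
    out = subprocess.run(["sensors"], capture_output=True, text=True, check=True).stdout
    return datetime.now(timezone.utc), parse_package_temps(out)
```

For example, you could call sample() every few seconds in a loop while stress-ng --cpu 32 --timeout 10m runs in another terminal. That produces a timestamped log of both packages, and a log whose timestamps stop advancing is a warning sign in its own right.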

I've always felt water cooling was unnecessary and overkill for computers... but with a server I can hear throughout the house whenever a CI job spins up on one of my projects, I'm willing to try it.

Assembling the water cooling components

There is practically no room inside the case of this server, so I was very constrained in what I could fit. I bought a pair of double 80mm radiators from eBay, which take up pretty much the entire front of a 2U case. I also bought a Barrow LTPRK-04I M, which is a CPU water block with an integrated water pump, and a basic water block for the other CPU (both radiators and both water blocks are in the same loop, served by the same pump).

The first problem I had when assembling it was finding enough room to connect pipes to the Barrow water block:

On the left are some fixed right-angle pipe fittings (also made by Barrow). I had to remove the cover from the pump to be able to screw them in, and after doing so, both were pointing into the cover, so connecting any further fittings to the ends wasn't feasible, and the cover wouldn't even fit back on.

So I ordered some swivelling right-angle fittings from Amazon for next-day delivery, and, as shown in the picture on the right, they foul the lid by a couple of millimeters instead.

The Barrow fittings would work if only I could back them out a bit... maybe... thicker O-rings?

Success! Probably. Hopefully they won't leak.

After that, it was relatively simple to plumb the rest of the system up:

And then lots and lots and lots and lots and lots of tedious drip feeding coolant into it and bleeding air out.

Initial testing

Initial results were promising: a 25 decibel sound level at idle, with both CPUs hovering around 37°C. I fired up stress-ng once more and let it run for a bit; the CPUs got up to ~70°C and stayed there. Meanwhile, I went into the other room where the computer was running and spotted a puddle on the motherboard(!) under the dripping filling tee... crap.

While mopping the puddle up, I realised something smelled hot, so I went back and checked the watch sensors command I'd left running in the other room, and the temperatures all looked okay... then Hogsy commented that it smelled like something was burning, and I noticed the timestamp on the screen was about 5 minutes old... okaythatsnotgoodI'mgonnapullthepowercableoutnow.

Double crap... I hope nothing's damaged. While it was cooling down I mopped up the remainder of the puddle and fiddled with the cable tie on the leaking joint.

A bit later I switched it back on; it didn't beep and wasn't responding over the network. Oh fuck, please don't be dead. I connected the BMC network port up on the bench, and that responded at least, so I opened the remote KVM console, powered on and...

I've never been so relieved to see a POST screen. It hung with a POST code indicating a memory fault, but at least the motherboard wasn't completely dead.

I guess I might as well reseat the memory in case somehow some of it got dislodged? *fiddle* *fiddle* OW! That RAM card is hot!

In fact, two of the RAM cards were too hot to touch, and after I pulled them out, the machine POSTed okay. I tried with them in again and it hung again; with an IR thermometer, I measured both of the faulty RAM cards rapidly climbing to 70°C while the others in the machine only reached 40°C.

I was running the stress test with the lid off, and no real airflow over the internals of the machine, so I'm not sure if the RAM just happened to die then, or if it got cooked by the lack of cooling.

*sigh*

At least DDR3 memory is old and reasonably cheap to replace.

I placed another couple of 80mm fans from my parts pile in front of it, placed the lid on top to encourage air flow and nervously started another stress test.

After 10 minutes of running the stress test, both CPUs were hovering between 50°C and 55°C, and there were no burning smells this time. I periodically lifted the lid to check the RAM modules during the test, and all of them hovered around the 40°C mark.

Yay!

Case fabrication/alteration

Some "new" RAM was ordered from eBay to replace the 32GB I probably cooked, and on to fabricating a new front panel for the server so the radiators and fans actually have somewhere to be mounted...

To highlight how tight the clearances above/below the radiators are, this lip at the front of the case prevented them from fitting, and had to be removed (half-assedly, using a nibbler and pliers because I was too lazy to dismantle the server so I could use more appropriate cutting tools).

Oh, even the lip on the lid won't fit under the front due to the radiator... okay... air grinder time.

My inability to cut a straight line strikes again... at least it's not visible when racked up.

The hole under the power button was intended for a pair of USB ports... but I cut it in the wrong place and the ports I bought wouldn't fit in the space left next to the radiator anyway... so I guess I'll get something printed on a sticker to cover it up.

As a finishing touch on the inside, I cut up a scrap of aluminium angle to make a pipe support since the hoses were sagging down and resting on the RAM cards.

Results

| | CPU temperature (idle) | RAM temperature (idle) | Noise (idle) | CPU temperature (10 minute load test) | RAM temperature (10 minute load test) | Noise (10 minute load test) |
|---|---|---|---|---|---|---|
| Original configuration | 40°C | No reading | 30dB | 55°C - 75°C | No reading | 73dB |
| Water cooled | 29°C | 37°C - 46°C | 56dB | 52°C | 51°C - 59°C | 56dB |

So, at idle the CPUs are practically at room temperature, and although the machine is noisier than before at idle, it's not too bad; it isn't too loud amongst the other stuff in the rack. When load testing, both CPUs now hang around 50°C and the machine isn't any louder than at idle. That surprised me a bit, but it looks like all the fans are already running at full speed at idle.

I discovered that the RAM modules do in fact have temperature sensors, and although the Linux sensors command doesn't see them, the onboard BMC does, and exposes them over IPMI... so naturally I hooked that up to Prometheus and Grafana to get pretty graphs (and alerting capability for overheating, failed fans or out-of-spec voltages).
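If you want to do the same, one approach is to read the BMC's sensor data repository with ipmitool and republish it in Prometheus's text exposition format. This sketch assumes the pipe-separated output of ipmitool sdr type Temperature shown in the comments. Sensor names such as P1-DIMMA1 TEMP are illustrative, not copied from this board. It isn't necessarily how this server's exporter is set up, and sdr_to_prometheus is a hypothetical helper.

```python
import re

# Assumed row format of `ipmitool sdr type Temperature`, e.g.
#   "P1-DIMMA1 TEMP   | B0h | ok  | 32.64 | 37 degrees C"
# Rows whose last column isn't "<number> degrees C" (e.g. "No Reading") are skipped.
READING_RE = re.compile(r"^\s*(-?\d+(?:\.\d+)?)\s+degrees C\s*$")


def escape_label(value: str) -> str:
    """Escape a label value as required by the Prometheus text exposition format."""
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def sdr_to_prometheus(sdr_output: str, metric: str = "ipmi_temperature_celsius") -> str:
    """Convert ipmitool SDR temperature rows into Prometheus gauge samples."""
    lines = [
        f"# HELP {metric} Temperature reported by the BMC over IPMI.",
        f"# TYPE {metric} gauge",
    ]
    for row in sdr_output.splitlines():
        fields = [field.strip() for field in row.split("|")]
        if len(fields) != 5:
            continue  # not a row in the assumed five-column format
        name, _sensor_id, _status, _entity, reading = fields
        match = READING_RE.match(reading)
        if match is None:
            continue  # e.g. an empty DIMM slot reporting "No Reading"
        value = float(match.group(1))
        lines.append(f'{metric}{{sensor="{escape_label(name)}"}} {value:g}')
    return "\n".join(lines) + "\n"
```

The result could be written to a .prom file for node_exporter's textfile collector (--collector.textfile.directory). An alerting rule along the lines of ipmi_temperature_celsius{sensor=~".*DIMM.*"} > 60 would then flag hot RAM; the 60°C threshold is an arbitrary example. The Prometheus community's ipmi_exporter is a ready-made alternative to rolling your own.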

The 4 fans on the front are all Arctic F8 fans: two from the previous configuration and two new ones which I ignorantly bought without much research. The F8 is an "airflow" fan, while the ideal fan for mounting in a "restricted" position (e.g. pushing air through a radiator or heatsink, rather than out of an unobstructed case exhaust) is a "pressure" fan like the Arctic P8, which has a lower airflow rating than the F8 but a higher static pressure, at the same rated noise level.

I don't have a set of P8 fans handy for testing, and the F8s are cooling the system well enough, so for the time being they will stay. If I have problems in the future, I might try swapping them for P8s or even P8 Max fans (much higher airflow and static pressure, but louder).
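Here is a rough sketch of why static pressure matters behind a radiator. A fan settles where its pressure/flow curve crosses the system's resistance curve, and that resistance rises roughly with the square of airflow. The Python below approximates each fan curve as a straight line between its rated maximum pressure and maximum flow. The two fans and the two resistance coefficients are made-up numbers in arbitrary units, not F8 or P8 specifications, and operating_flow is a hypothetical helper.

```python
import math


def operating_flow(q_max: float, p_max: float, k: float) -> float:
    """Airflow where a linearised fan curve meets a quadratic system curve (k must be > 0).

    Fan curve:    p = p_max * (1 - q / q_max)
    System curve: p = k * q**2
    Equating them gives k*q**2 + (p_max / q_max)*q - p_max = 0; return the positive root.
    """
    b = p_max / q_max
    return (-b + math.sqrt(b * b + 4 * k * p_max)) / (2 * k)


# Hypothetical fans and system resistances (arbitrary units, invented for illustration).
FANS = {"airflow fan": (40.0, 1.0), "pressure fan": (30.0, 2.5)}  # (q_max, p_max)
SYSTEMS = {"open path": 0.0005, "radiator": 0.01}  # k in p = k * q**2

for system, k in SYSTEMS.items():
    for fan, (q_max, p_max) in FANS.items():
        print(f"{system:9} {fan:12} {operating_flow(q_max, p_max, k):5.1f}")
```

With these invented numbers, the high-flow fan edges ahead when the path is nearly open (about 26.2 versus 26.0 units). Behind a radiator-like restriction, the pressure fan moves roughly 12.2 units against the airflow fan's 8.8. Real fan curves are not straight lines, so treat this as intuition rather than a prediction for this build.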

Final thoughts

This meandering project grew out of an impulse buy of a cheap motherboard bundle from eBay... by the time you add in the case, power supply, storage, network cards, multiple different heatsink/fan/water cooling configurations and materials I used in fabricating custom parts, I probably could've bought one or two faster servers, complete and ready to just stick in the rack.

But still, I don't think I regret going down this path, even with the money spent and shoddy fabrication in places on my part.
